Vibe Coding Part 2: National Parks Tracking App with ChatGPT

For my next vibe coding adventure, I built a web app for tracking visits to U.S. national parks. Part 1 is here.

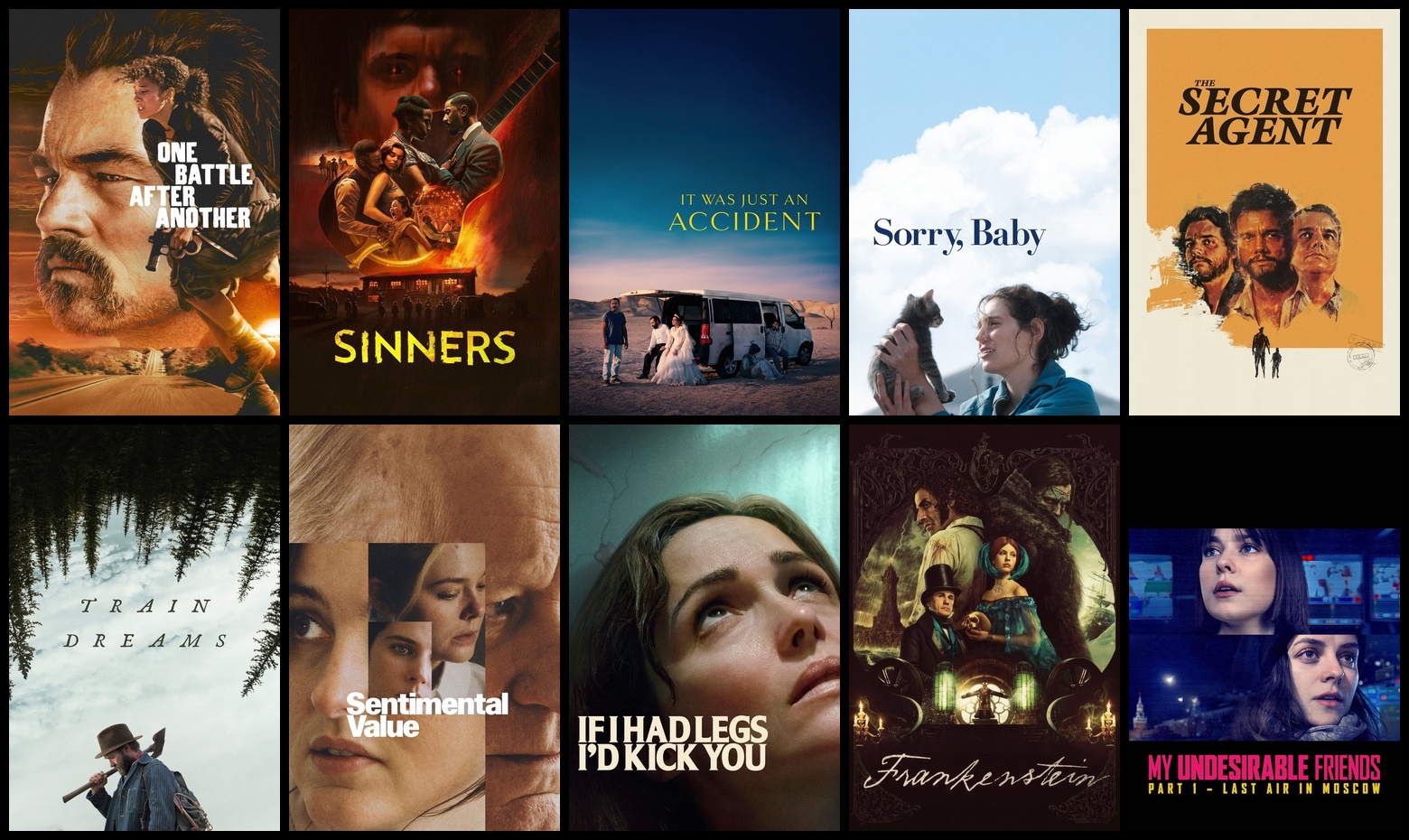

National Parks explorer and tracker

The start of the project was very similar: I used prompts to have ChatGPT (GPT-4-turbo model, with updates as of May 2025) interview me to build a specification, then fed the specification back into it to build a blueprint (a detailed development iteration plan), a list of prompts to use, and a to-do list to mark things off as we went. These files went straight into my Git repo under /docs.

Instead of building in a dedicated AI environment like Windsurf, I used a custom GPT to which I fed the necessary context. This would be my programming partner for the next few weeks. I still tried to follow the same rough guidelines: I would write my own code only as a last resort and otherwise rely on output from the AI. The AI was instructed to build the app in a test-driven manner, partly because this is just better in the long run, and partly because it would help me better understand what it was trying to do.

My custom parks builder GPT

There’s a big difference between using this approach and using Windsurf: the GPT has to be constantly reminded of the necessary context to do its work. It might remember the code it wrote for a route two or three prompts ago, but it usually misses some key details. Example: all NPS parks have a unique identifier code (Acadia is `acad`, and as you can see, it is part of the permalink URL). I originally named the attribute `park_code` but eventually changed it to `code`. For quite a while, the GPT kept referring to the attribute as `park_code` unless I re-shared the model with the actual schema.
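To make the rename concrete, here is a minimal sketch of the kind of schema snippet I would paste back into the chat. It is not the app's actual model: the `Park` class, the `name` field, and the `/parks/` URL prefix are assumptions for illustration. Only the `code` attribute and the `acad` value come from the real project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Park:
    """Illustrative stand-in for the park model; only `code` is from the real schema."""

    code: str  # NPS identifier such as "acad" (originally named park_code)
    name: str  # assumed field, for illustration only


def permalink(park: Park) -> str:
    """Build a permalink path from the park's code (the /parks/ prefix is assumed)."""
    return f"/parks/{park.code}"


acadia = Park(code="acad", name="Acadia National Park")
print(permalink(acadia))
```

Any generated code that still reaches for `park.park_code` fails immediately against a model like this, which is usually how I noticed the GPT had lost track of the schema.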

In Windsurf, the files that comprise the app are available for the AI to scan and review whenever it needs to. You ask it to enhance a model, and it will just go find the model, inspect it, and do its work. Like magic!

I’m not sure how much I like this magical thinking and acting. This parks app is one that I want to actually host on my own server and use indefinitely. That means it isn’t a throwaway, use-for-a-bit-then-discard type app. Because GPT tells me what code to add and where to put it, I understand this codebase much better than I understood my golf coaching app. Being in the loop also helped me catch some problems. I have a lot of experience with Python, Flask, and Pytest, so when it did strange stuff, I had to revisit my own knowledge and either fix what it suggested or realize that I needed to learn some new techniques.

For example, GPT extensively used Flask blueprints for the app, which I had only a cursory understanding of. The app is better off for having this architecture, and I’ve learned a lot along the way.
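For readers who, like me, had only seen blueprints in passing, the pattern looks roughly like this. This is a minimal sketch rather than the app's real code: the blueprint name, the URL prefix, the `park_detail` view, and the `create_app` factory are all assumptions.

```python
from flask import Blueprint, Flask
from markupsafe import escape

# Hypothetical blueprint; the name "parks" and the URL prefix are assumptions.
parks_bp = Blueprint("parks", __name__, url_prefix="/parks")


@parks_bp.route("/<code>")
def park_detail(code: str) -> str:
    """Return a placeholder detail page for the park with the given code."""
    return f"Park detail for {escape(code)}"


def create_app() -> Flask:
    """Application factory that registers each blueprint on a fresh app."""
    app = Flask(__name__)
    app.register_blueprint(parks_bp)
    return app
```

Each feature area gets its own blueprint module, and the factory is the one place that wires them together, which keeps routes grouped by concern instead of piling up in a single file.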

In another case, even though I instructed GPT to use htmx and minimal JavaScript for dynamic in-place editing, it would still revert to classic AJAX-style JavaScript and JSON. Still, it knows htmx and would always do a fine job of rectifying problems like this once I noticed them.

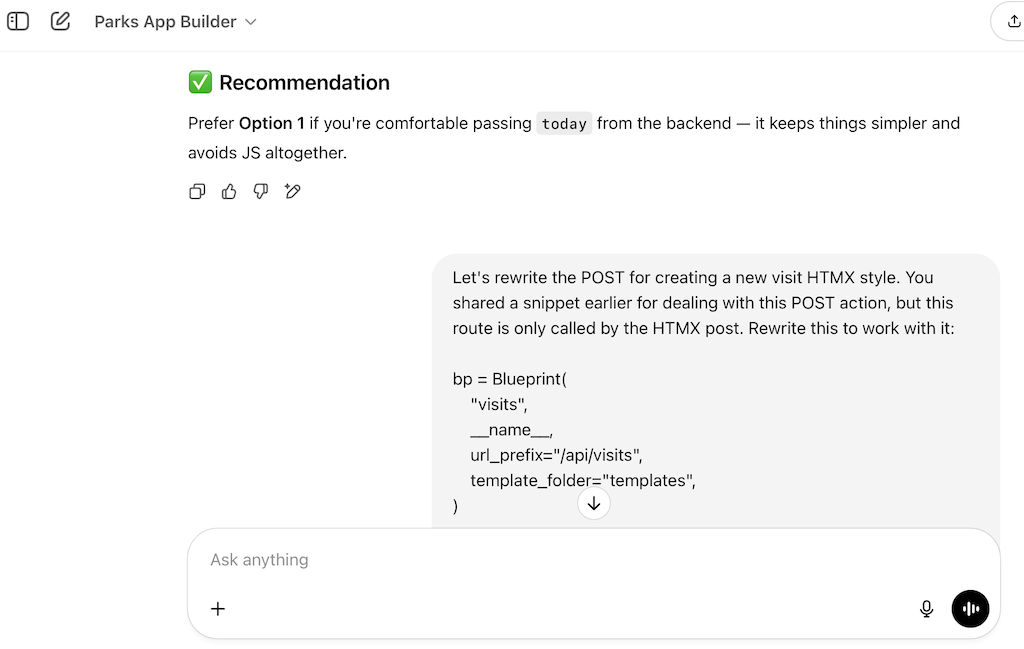

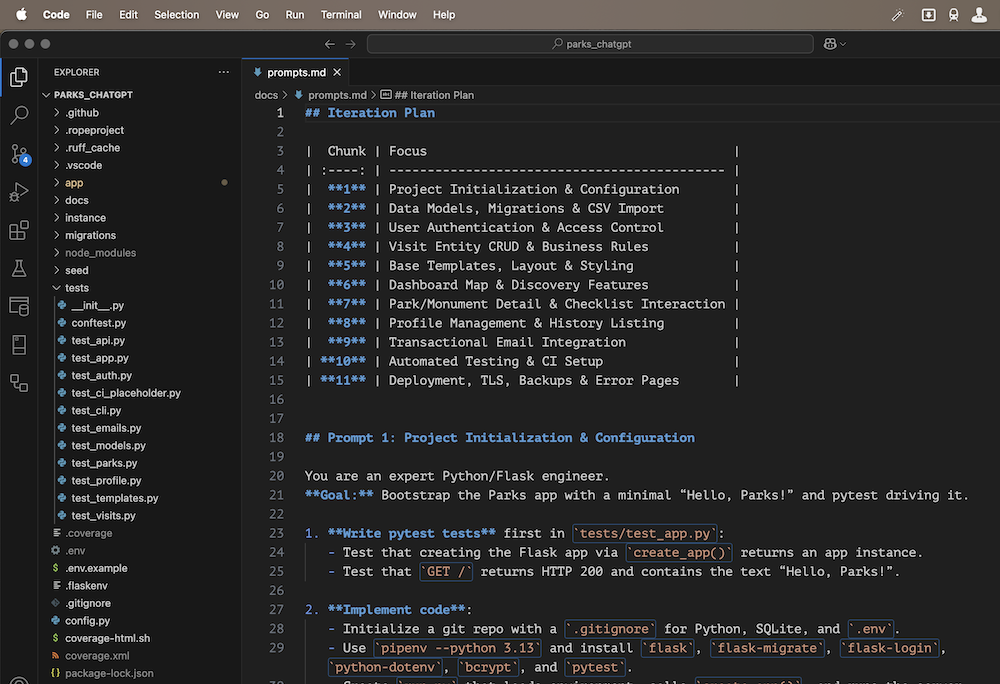

VS Code with my project

One interesting aspect of using ChatGPT outside of the IDE is that I also have AI assistance running inside the editor via Copilot. Copilot mostly leaves you alone when you bulk-paste code in, but it would still step in (sometimes helpfully, sometimes intrusively) as I made small tweaks to the app.

Worth mentioning: Copilot absolutely excels at writing solid Pytest cases. As I worked to improve test coverage, I found it easier to do so in-place in VS Code without invoking the external GPT.

I’ve worked through everything I had in my original spec other than deployment to my hosted server. At this point, I’m using GitHub Issues to manage the project (alongside the amazing GitHub CLI), but still using GPT to guide most of the enhancement work.

I did cheat a little with this app and used Windsurf for a specific challenge. After the app was fully developed with ChatGPT, I converted the linting, formatting, and virtual environment management to the latest Astral tools: uv and Ruff. With the Ruff linting, I went all-in on adherence, including type hints and docstrings. Ruff identified over 700 issues that were going to be a serious chore to fix manually. Enter Windsurf, with instructions to add docstrings and type hints everywhere, periodically running `uv run ruff check .` to check progress. After about 3 hours of babysitting Windsurf through the process, I had a fully linted and properly formatted solution.
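To give a sense of what those fixes looked like, here is an illustrative before and after. The helper is hypothetical and not from the app. I am also assuming the relevant checks were Ruff's docstring (`D`) and annotation (`ANN`) rule families, with Google-style docstrings; the exact rule selection is not shown here.

```python
# Before (as an untyped, undocumented helper might look):
#
#     def visited_percentage(visited, total):
#         return 0.0 if total == 0 else 100 * visited / total


def visited_percentage(visited: int, total: int) -> float:
    """Return the share of parks visited, as a percentage.

    Args:
        visited: Number of parks marked as visited.
        total: Number of parks being tracked.

    Returns:
        The percentage visited, or 0.0 when no parks are tracked.
    """
    if total == 0:
        return 0.0
    return 100 * visited / total
```

Multiply that by every function, method, and module in the project and it is easy to see where 700 findings come from, and why it is the kind of mechanical work worth handing to an agent.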